I recently built a fun, interactive web app with an animation that follows your face as you move. Let me take you through how I did it, from designing the animation in Rive to getting live face-position data from a camera, passing it through an ESP8266, and reading it in the browser with the Web Serial API.

Animation with Rive – The Magic Behind the Motion

I used Rive to create and control the animation in the web app. Rive is a powerful tool for designing real-time, interactive animations, and its runtimes let those animations run on many platforms, including the web, iOS, Android, and Flutter.

You can create animations in the Rive Editor, which feels familiar if you're already using Figma or After Effects. It’s easy to design, rig, and animate graphics.

Rive State Machine – Making the Animation Smart

Rive supports State Machines, which add logic to an animation: values you set at runtime, called inputs, decide which animation plays or how animations blend.

In my project, the state machine is driven by two number inputs, x and y, which represent the position of a person’s face. When x or y changes, the animation moves to match. For example:

- Face moves left (x changes) → the animation moves left

- Face moves up (y changes) → the animation moves up

This gave my animation dynamic, interactive behavior. It feels alive!
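Here is a minimal TypeScript sketch of the "set x and y from code" step. It assumes the state machine has two number inputs literally named `x` and `y`; those names are hypothetical, so use whatever you called them in the Rive Editor. The `NumberInput` interface and `applyFacePosition` helper are made up for this example, shaped after the input objects the Rive web runtime returns (a `name` plus a settable `value`), so those objects can likely be passed straight in.

```typescript
// Stand-in for a Rive state machine input: a name plus a settable value.
// `value` is number | boolean because inputs can be numbers or booleans.
interface NumberInput {
  name: string;
  value: number | boolean;
}

interface FacePosition {
  x: number;
  y: number;
}

// HYPOTHETICAL input names: they must match the number inputs you
// created on the state machine in the Rive Editor.
const X_INPUT = "x";
const Y_INPUT = "y";

// Copies a face position into the matching state machine inputs.
// Returns false if either input is missing (e.g. a typo in its name).
function applyFacePosition(inputs: NumberInput[], pos: FacePosition): boolean {
  const xInput = inputs.find((input) => input.name === X_INPUT);
  const yInput = inputs.find((input) => input.name === Y_INPUT);
  if (!xInput || !yInput) return false;
  xInput.value = pos.x;
  yInput.value = pos.y;
  return true;
}
```

With the Rive web runtime, you would fetch the inputs once the file has loaded, via your `Rive` instance's `stateMachineInputs("State Machine 1")`, and call `applyFacePosition` whenever a new reading arrives. "State Machine 1" is the name the editor typically gives a new state machine; substitute your own.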

Getting Face Data Using the Grove Vision AI Module

To detect the position of a person’s face, I used a Grove Vision AI Module V2. It can run a face-detection model and report a bounding box around the detected face; from that box, we can work out the x and y coordinates of the face.

Now I had real-time data on where the person’s face was!
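Turning a bounding box into x and y could look like the sketch below. It assumes the firmware reports each box as a top-left corner plus width and height in camera pixels; if yours already reports the box center, drop the `w / 2` and `h / 2` offsets. It also rescales the center to a 0 to 100 range, which is an arbitrary choice for the Rive inputs, and takes the frame size as a parameter because that depends on the model loaded onto the module. `boxToFacePosition` is a hypothetical helper, written here for the browser side; the same arithmetic could run on the ESP8266 instead.

```typescript
// Bounding box in camera pixels. ASSUMPTION: (x, y) is the top-left
// corner; some firmware reports the box center instead.
interface BoundingBox {
  x: number;
  y: number;
  w: number;
  h: number;
}

// Converts a face bounding box into its center point, scaled to 0..100
// (an assumed range for the Rive x/y inputs).
function boxToFacePosition(
  box: BoundingBox,
  frameWidth: number,
  frameHeight: number,
): { x: number; y: number } {
  const cx = box.x + box.w / 2;
  const cy = box.y + box.h / 2;
  // Clamp so a box that hangs off the frame edge stays in range.
  const clamp = (v: number) => Math.min(100, Math.max(0, v));
  return {
    x: clamp((cx * 100) / frameWidth),
    y: clamp((cy * 100) / frameHeight),
  };
}
```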

Sending Data with ESP8266

Next, I needed to send this face-position data (x and y) to my web app, so I connected the Grove module to an ESP8266, a small Wi-Fi-enabled microcontroller. The ESP8266 reads the x and y values from the Grove module and sends them to the computer over its USB serial connection.

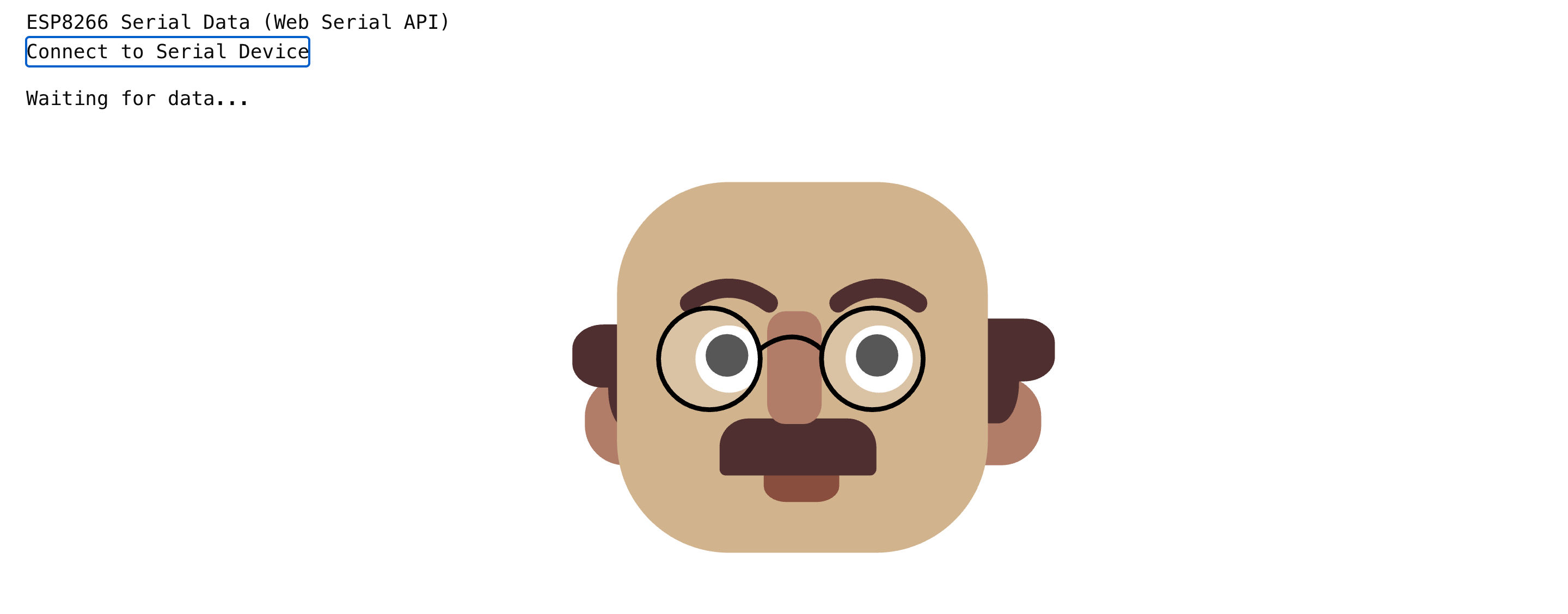
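The exact message format isn't part of the setup above, so here is a sketch under one simple assumption: the ESP8266 prints one reading per line as two comma-separated numbers, for example with `Serial.print(x); Serial.print(','); Serial.println(y);` in an Arduino sketch. On the browser side, each received line can then be validated and parsed. `parseFaceLine` is a hypothetical helper name.

```typescript
interface FacePosition {
  x: number;
  y: number;
}

// Parses one line such as "120,95" into a face position.
// ASSUMED format: two numbers separated by a comma. Returns null for
// anything else, such as the garbled bytes an ESP8266 prints while booting.
function parseFaceLine(line: string): FacePosition | null {
  // trim() also drops the "\r" that Serial.println() sends before "\n".
  const parts = line.trim().split(",");
  if (parts.length !== 2) return null;
  const [xText, yText] = parts.map((p) => p.trim());
  // Number("") is 0, so reject empty fields explicitly.
  if (xText === "" || yText === "") return null;
  const x = Number(xText);
  const y = Number(yText);
  if (!Number.isFinite(x) || !Number.isFinite(y)) return null;
  return { x, y };
}
```

Returning `null` instead of throwing lets the caller simply skip bad lines, which matters because a serial stream often starts mid-message.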

Reading Data in the Web App – Web Serial API

Now the final part: getting the data into my browser.

I used the Web Serial API, a browser API (supported in Chromium-based browsers such as Chrome and Edge, but not in Firefox or Safari) that lets web apps read data directly from serial devices connected over USB, like our ESP8266.

In the code:

- Opened the serial port

- Read the incoming x and y data

- Passed the values into the Rive state machine using the Rive runtime API
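The first two steps above, opening the port and reading the incoming data, can be sketched in TypeScript as follows. Serial data arrives in arbitrary chunks, so a single reading may be split across two reads; the hypothetical `LineSplitter` class buffers text until a full line is available. The hypothetical `readLines` function accepts any byte stream, so it works with the `readable` stream of an opened Web Serial port and can also be exercised without hardware.

```typescript
// Serial data arrives in arbitrary chunks, so one reading such as "120,95\n"
// can be split across two reads. This class buffers text until a line is complete.
class LineSplitter {
  private buffer = "";

  // Appends a chunk of text and returns every line it completed.
  push(chunk: string): string[] {
    this.buffer += chunk;
    const parts = this.buffer.split("\n");
    // The last part is an unfinished line ("" if the chunk ended with "\n").
    this.buffer = parts.pop() ?? "";
    // Strip the "\r" that Arduino's Serial.println() puts before "\n".
    return parts.map((line) => line.replace(/\r$/, ""));
  }
}

// Reads a byte stream (for example the `readable` stream of an open Web Serial
// port) and calls onLine for each complete line until the stream closes.
// A trailing line without "\n" is never emitted.
async function readLines(
  readable: ReadableStream<Uint8Array>,
  onLine: (line: string) => void,
): Promise<void> {
  const reader = readable.getReader();
  const decoder = new TextDecoder();
  const splitter = new LineSplitter();
  try {
    while (true) {
      const { value, done } = await reader.read();
      if (done) break;
      // stream: true keeps multi-byte characters that span two chunks intact.
      for (const line of splitter.push(decoder.decode(value, { stream: true }))) {
        onLine(line);
      }
    }
  } finally {
    reader.releaseLock();
  }
}
```

In the page, the port must be requested from a user gesture such as a button click, and the page must be served over HTTPS or from localhost: `const port = await navigator.serial.requestPort(); await port.open({ baudRate: 115200 });`, then `readLines(port.readable, handleLine)`. The 115200 baud rate is an assumption and has to match `Serial.begin()` in the ESP8266 sketch, and `handleLine` is a placeholder for your own code that parses each line and updates the Rive inputs. In TypeScript you may also need separate Web Serial type definitions, since they are not part of the standard DOM typings.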

Now, as your face moves in front of the camera, the animation moves in real time 🎯

TL;DR – How It Works

- Animation made in Rive, controlled using x/y

- Face tracking via Grove Vision AI gives x/y

- ESP8266 sends x/y over USB

- Web app uses the Web Serial API to read the data

- Rive animation moves live with your face 🎭